Levels of AGI

Working from first principles, capability levels can be defined and the status of current systems tracked against them, as shown in the figure below:

In some cases AI has already reached superhuman capability. AlphaFold, for example, is considered a Level 5 Narrow AI ("Superhuman Narrow AI") because it predicts protein structures more accurately than top scientists. Current conversational systems such as ChatGPT and Bard perform at a "Competent" level in some tasks, such as essay and script writing, where they match and sometimes exceed human experts, but they are still "Emerging" in most other areas, such as math and factuality. They are therefore classified as Level 1 General AI ("Emerging AGI"). In this scheme, AI systems are rated by how well they perform tasks compared with skilled adults. For example, a "Competent AGI" (Artificial General Intelligence) should perform at or above the 50th percentile of skilled adults on most cognitive tasks, though it might reach Expert, Virtuoso, or even Superhuman levels in specific areas. Following the progress of AGI within specific domains of expertise will be fascinating in the coming years.

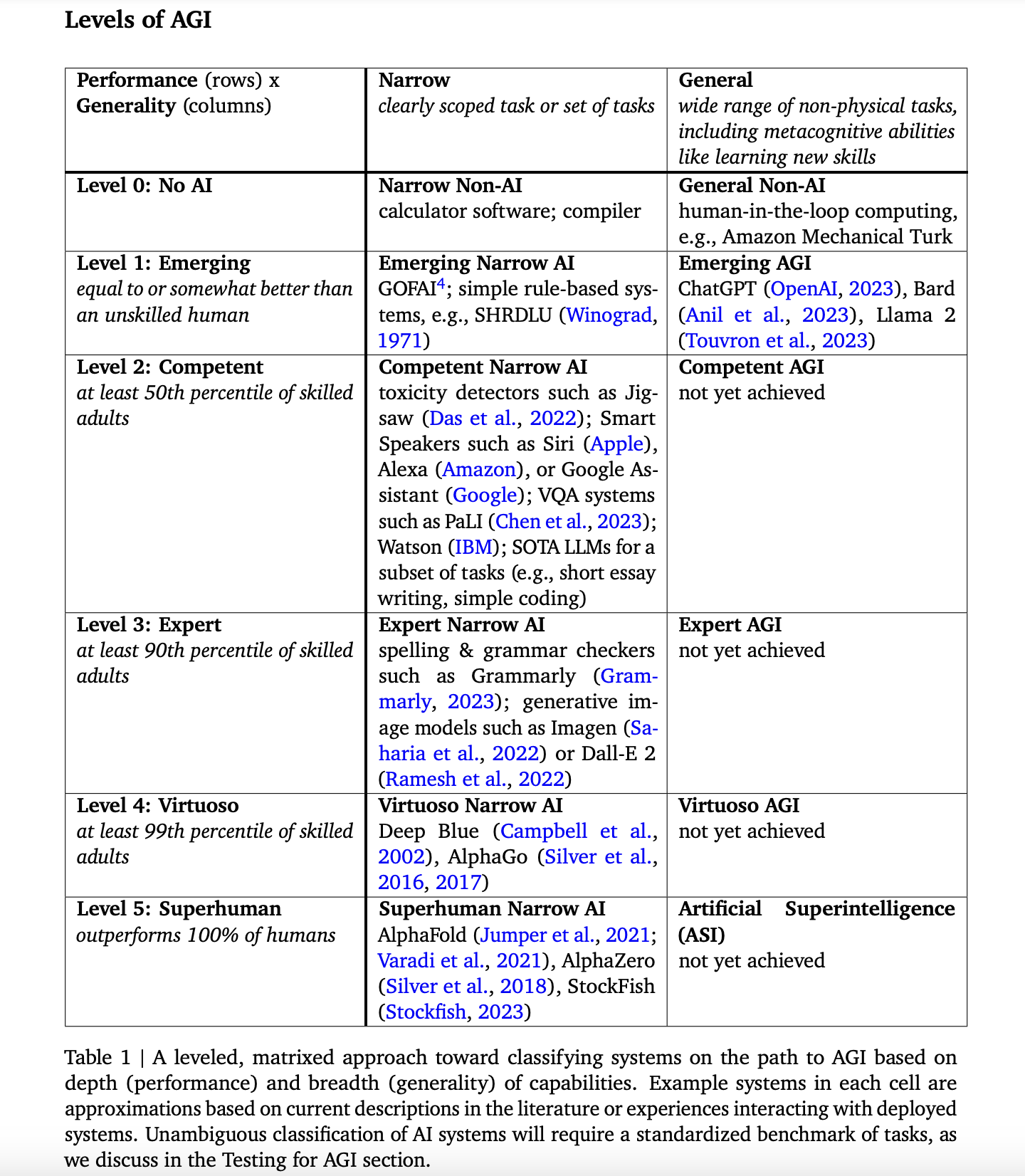

The capability matrix defined in the paper "Levels of AGI: Operationalizing Progress on the Path to AGI" is a method for classifying AI systems along the path to Artificial General Intelligence (AGI). The matrix is built on two factors:

- Depth (Performance): How well the AI system performs certain tasks.

- Breadth (Generality): The range of different tasks the AI system can perform.

The paper gives examples for each cell of the matrix, drawn from the current literature or from interactions with existing AI systems. It notes, however, that classifying AI systems unambiguously requires a standardized set of tasks. The paper discusses this need for a benchmark further in its section on testing for AGI.
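To make the two-axis classification concrete, here is a minimal Python sketch of the matrix as a data structure. It is an illustration only, not code from the paper: the names `Performance`, `Generality`, `Classification`, and `meets_competent_bar` are hypothetical, the label format is simplified, and treating "most cognitive tasks" as a strict majority of a task list is an assumption made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Mapping


class Performance(IntEnum):
    """Depth axis: the performance levels named in this section."""
    NO_AI = 0
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


class Generality(IntEnum):
    """Breadth axis: narrow (specific tasks) versus general (wide range of tasks)."""
    NARROW = 0
    GENERAL = 1


@dataclass(frozen=True)
class Classification:
    """One cell of the matrix assigned to a named system."""
    system: str
    performance: Performance
    generality: Generality

    def label(self) -> str:
        # Simplified naming for illustration; the paper may name some cells differently.
        if self.performance is Performance.NO_AI:
            return "Level 0: No AI"
        suffix = "Narrow AI" if self.generality is Generality.NARROW else "AGI"
        return f"Level {int(self.performance)}: {self.performance.name.title()} {suffix}"


def meets_competent_bar(task_percentiles: Mapping[str, float],
                        threshold: float = 50.0) -> bool:
    """Return True if a strict majority of tasks reach the threshold percentile.

    The 50th-percentile bar comes from the text above; reading "most tasks"
    as "more than half of the listed tasks" is an assumption of this sketch.
    """
    if not task_percentiles:
        return False
    passed = sum(1 for p in task_percentiles.values() if p >= threshold)
    return passed > len(task_percentiles) / 2


# The two examples discussed in this section.
alphafold = Classification("AlphaFold", Performance.SUPERHUMAN, Generality.NARROW)
chatgpt = Classification("ChatGPT", Performance.EMERGING, Generality.GENERAL)

print(alphafold.label())
print(chatgpt.label())

# Made-up percentiles, purely to exercise the function: strong on one task,
# weak on two, so the system does not clear the "Competent" bar overall.
print(meets_competent_bar({"essay writing": 70.0, "math": 20.0, "factuality": 30.0}))
```

The task names and percentile values in the last call are invented for illustration. A real assessment would need the standardized benchmark that the paper calls for.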