What Is a Causal Model?

Unlike a statistical model, which aims only to model the underlying distribution, a causal model must also be able to achieve other goals beyond modelling that distribution, as shown in the diagram below.

AGI has been defined in several ways. A recent paper, "Levels of AGI: Operationalizing Progress on the Path to AGI," offers a fascinating exploration of how AGI should be defined.

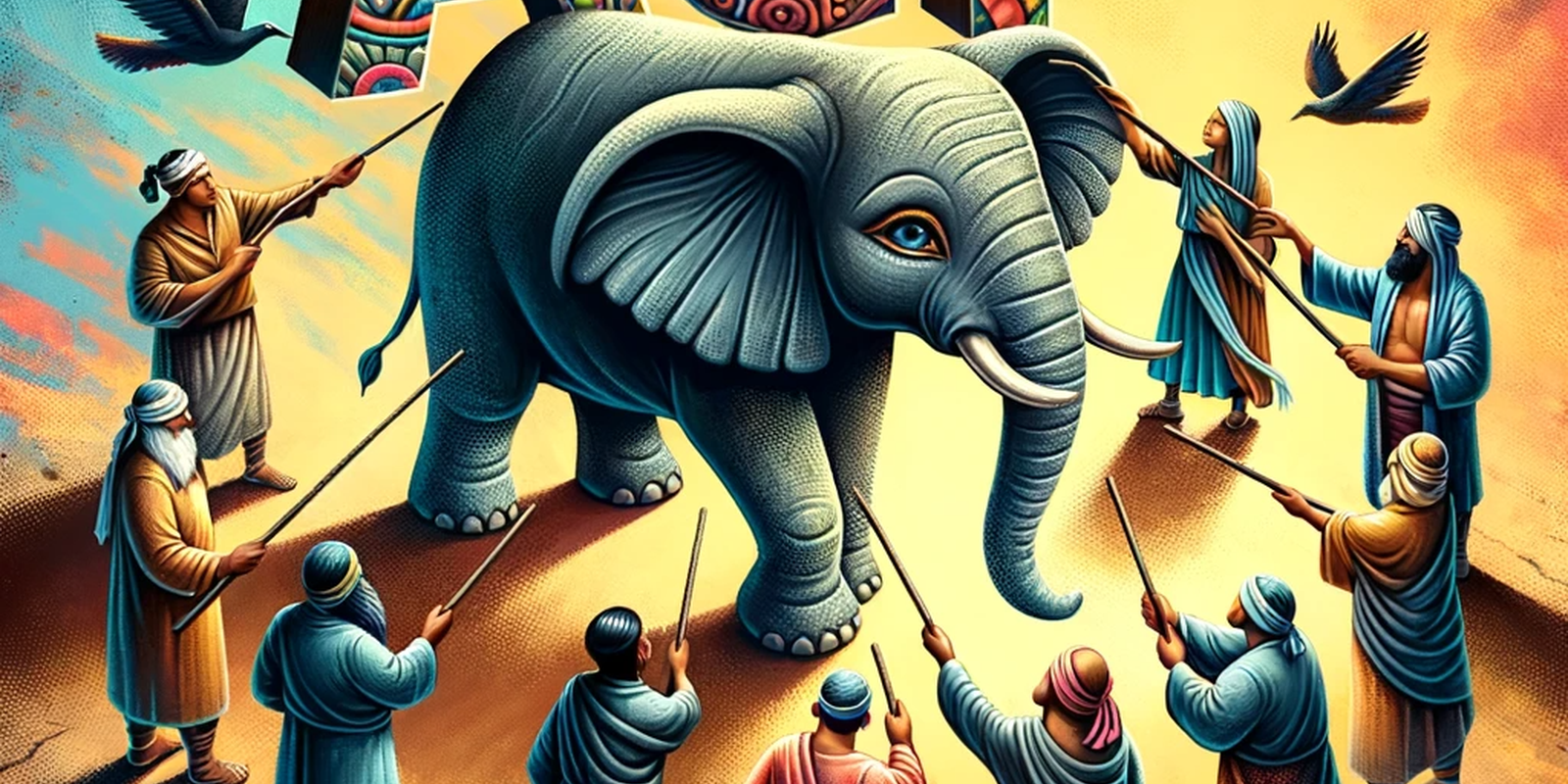

The Complexity of Defining AGI

Previous approaches to defining AGI are best understood through the examples, or case studies, that scientists have proposed as challenges for judging whether a computer system is generally intelligent. Several attempts have been made to define AGI; a few notable ones are listed below:

A system that passes the Turing Test is AGI:

Proposed by Alan Turing in 1950 as the "imitation game," this test asks a human judge to decide, from text responses alone, whether they are interacting with a machine or another human. The test has been widely criticized as insufficient for defining or measuring AGI: modern large language models (LLMs) can sometimes pass it, yet doing so does not necessarily reflect genuine "intelligence." The authors therefore suggest focusing on what a machine can do rather than on whether it can think.
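
To make the pass criterion concrete, the following is a minimal Python sketch of how results from a text-only imitation game might be scored. It assumes each session pairs a judge with a single hidden partner (machine or human) and records the judge's verdict. The `Trial` record, the function names, and the default pass threshold of 0.5 (judges do no better than chance) are illustrative assumptions, not part of Turing's paper or the Levels of AGI framework.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    """One text-only session: the judge reads the partner's replies, then guesses."""
    partner_is_machine: bool  # ground truth for this session
    judge_says_machine: bool  # the judge's verdict


def identification_accuracy(trials: list[Trial]) -> float:
    """Fraction of sessions in which the judge identified the partner correctly."""
    if not trials:
        raise ValueError("need at least one trial")
    correct = sum(t.partner_is_machine == t.judge_says_machine for t in trials)
    return correct / len(trials)


def passes_turing_test(trials: list[Trial], threshold: float = 0.5) -> bool:
    """Assumed pass rule: judges tell machine from human no better than `threshold`."""
    return identification_accuracy(trials) <= threshold


trials = [
    Trial(partner_is_machine=True, judge_says_machine=True),
    Trial(partner_is_machine=True, judge_says_machine=False),
    Trial(partner_is_machine=False, judge_says_machine=False),
    Trial(partner_is_machine=False, judge_says_machine=True),
]
print(identification_accuracy(trials))  # 0.5
print(passes_turing_test(trials))       # True
```

A single accuracy number like this also illustrates the criticism above: it measures how human-like the replies appear to a judge, not what the system can actually do.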

A system with Strong AI is the first stepping stone to AGI:

The notion of Strong AI was articulated by philosopher John Searle in 1980, who described (and argued against) the claim that a computer with the right programming can literally be said to have understanding and other cognitive states, implying that it possesses consciousness. On this view, Strong AI could be one path to achieving AGI (Artificial General Intelligence). However, there is no scientific consensus on how to determine whether a machine actually has Strong AI characteristics such as consciousness. Because there is no agreed method for measuring these attributes, defining AGI in terms of process-oriented properties like machine consciousness is considered impractical.

A system with capabilities comparable to the human brain is AGI:

The term AGI was first used in 1997, to describe AI systems that rival or surpass the human brain in complexity and speed and that can be applied across many different domains. However, the success of modern AI approaches that are not brain-like, such as transformer-based architectures, suggests that AGI does not necessarily need to mimic the human brain.

A system achieving human-level performance on cognitive tasks is AGI:

This definition describes AGI as a machine that can perform the cognitive tasks that people can typically do. It focuses on mental rather than physical tasks, but it leaves unclear which specific tasks, and which people, should serve as the benchmark.

A system that has the ability to learn tasks is AGI:

This view treats AGI as AI that can learn a wide range of tasks, as humans can, and so highlights learning ability, rather than performance on a fixed set of tasks, as central to AGI.

A system capable of economically valuable work is AGI:

OpenAI's charter describes AGI as highly autonomous systems that outperform humans at most economically valuable work. This definition is measurable, but it may not capture every aspect of intelligence, such as artistic creativity or emotional intelligence.
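
Because this definition is framed as measurable, a small calculation shows what measuring it could involve. The Python sketch below is an illustration under stated assumptions, not OpenAI's method: it weights each category of work by a hypothetical economic value, compares the system's score with a human baseline on a task-specific metric, and reads "most" as strictly more than half of the total value. The `WorkTask` fields, the task names, and every number are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkTask:
    """One category of paid work, scored for both the AI system and human workers."""
    name: str
    economic_value: float  # hypothetical weight, e.g. total annual wages for this work
    system_score: float    # system's performance on a task-specific metric
    human_score: float     # typical human worker's score on the same metric


def share_outperformed(tasks: list[WorkTask]) -> float:
    """Value-weighted fraction of work on which the system beats the human baseline."""
    total = sum(t.economic_value for t in tasks)
    if total <= 0:
        raise ValueError("total economic value must be positive")
    won = sum(t.economic_value for t in tasks if t.system_score > t.human_score)
    return won / total


def meets_economic_definition(tasks: list[WorkTask], majority: float = 0.5) -> bool:
    """Read "most economically valuable work" as strictly more than `majority` of total value."""
    return share_outperformed(tasks) > majority


# Made-up illustrative figures, not real measurements.
tasks = [
    WorkTask("bookkeeping", 40.0, system_score=0.92, human_score=0.88),
    WorkTask("translation", 35.0, system_score=0.81, human_score=0.85),
    WorkTask("customer support", 25.0, system_score=0.90, human_score=0.80),
]
print(round(share_outperformed(tasks), 2))  # 0.65
print(meets_economic_definition(tasks))     # True
```

Weighting by value rather than counting tasks is itself a design choice: counting would treat a niche task and an entire profession equally. Either way, the calculation only covers work that has a price, which is exactly the gap the definition leaves for qualities like creativity or emotional intelligence.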

A system that passes the Coffee Test is AGI:

The "Coffee Test" is a concrete example of the view that AGI should be flexible and general. It is a simple, practical test of whether an AI can handle everyday tasks: the AI is asked to go into an average American home and figure out how to make coffee. This requires the AI to understand an unfamiliar environment (finding the kitchen, locating the coffee machine), learn how to use tools it has not seen before (operating that particular coffee machine), and successfully complete the task (making the coffee). In short, this approach to AGI asks that AI handle a wide range of tasks and situations, learning and adapting as needed, much as a human would.
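
The test's dependent steps can be sketched as an ordered checklist scored against an agent's event log. In this minimal Python sketch, the subgoal names, the event-log format, and the rule that each step must occur after the previous one are all hypothetical; the Coffee Test itself prescribes no formal checklist. The sketch also assumes the log comes from a home the agent has never seen, which the code cannot verify.

```python
# Hypothetical subgoal names; the Coffee Test itself defines no formal checklist.
COFFEE_TEST_SUBGOALS = (
    "enter_house",
    "find_kitchen",
    "locate_coffee_machine",
    "operate_coffee_machine",
    "coffee_made",
)


def completed_in_order(events: list[str],
                       subgoals: tuple[str, ...] = COFFEE_TEST_SUBGOALS) -> list[str]:
    """Return the subgoals the agent achieved, in order, according to its event log.

    Each subgoal must appear after the previous one; scanning stops at the first
    missing step, because later steps depend on earlier ones.
    """
    achieved: list[str] = []
    position = 0
    for goal in subgoals:
        try:
            position = events.index(goal, position) + 1
        except ValueError:  # subgoal never reached after the previous one
            break
        achieved.append(goal)
    return achieved


def passes_coffee_test(events: list[str]) -> bool:
    """Pass only if every subgoal, ending with actually making coffee, was reached."""
    return completed_in_order(events) == list(COFFEE_TEST_SUBGOALS)


log = ["enter_house", "open_wrong_door", "find_kitchen",
       "locate_coffee_machine", "read_manual", "operate_coffee_machine"]
print(completed_in_order(log))
# ['enter_house', 'find_kitchen', 'locate_coffee_machine', 'operate_coffee_machine']
print(passes_coffee_test(log))  # False
```

Irrelevant events such as `open_wrong_door` are tolerated, since exploring an unfamiliar home is part of the task; what matters is that the agent eventually reaches every step in order, including the final one.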

A system capable of performing complex tasks is AGI:

This concept, which Mustafa Suleyman and Michael Bhaskar call Artificial Capable Intelligence (ACI), describes AI systems that can perform complex, multi-step tasks in the real world. One proposed test is the "Modern Turing Test," in which an AI is given capital and must turn it into a larger sum. This test emphasizes economic skill, but it carries risks, such as encouraging systems to focus only on making money. Like the flexibility and generality tests, ACI's strength lies in its emphasis on performing real-world tasks.

State-of-the-art language models such as ChatGPT are already AGI:

The authors of the article "Artificial General Intelligence Is Already Here" argue that current advanced language models (such as GPT-4) already qualify as AGI because they can discuss a wide range of topics, handle different types of inputs and outputs, work in multiple languages, and learn new tasks quickly. However, generality alone is not enough: to truly count as AGI, these models' performance (accuracy and reliability) must match their generality.

In summary, AGI is a complex concept with many proposed definitions and tests. Most of them ask whether AI can perform a wide range of tasks, understand complex concepts, and interact effectively in the real world, but there is still debate over what exactly constitutes AGI and how to measure it. In later sections, we follow this approach to implement and measure the safety and performance of AGI systems.